The natural question following the Saudi Arabian conference is “Who defines good?”

As you can see, the elite have big plans. They want to “reframe the dialogue” happening around “humanity’s pillars” going forward.

Every aspect of human life will be shaped by Machine Learning, and your life will depend on its success.

Download the detailed program PDF here to see for yourself what they’re saying.

- Tagline: “AI for the good of humanity”

- Theme: “Now, Next, and Never” — The forum asks how AI is currently being used, how it will be used going forward, and how it should never be used

- Futurists, ethicists, and scientists all repeated the same message of optimism, profitability, and exponential improvements that are impossible to stop

“Don’t be afraid, all will be good.”

That was the dry concluding remark by Prof. Dr. Jürgen Schmidhuber, the Scientific Director of Swiss AI Lab IDSIA and Co-Founder of NNAISENSE. Although delivered with more enthusiasm by fellow presenters, this was the common reassurance at the 2022 Global AI Summit in Riyadh, Saudi Arabia.

Perhaps this is what the room full of identically-dressed Arab men in the traditional Islamic white tunics and head scarves needed to hear. The event was advertised as being under the patronage of Crown Prince Mohammed bin Salman bin Abdulaziz Al Saud. The fact that the royal family was putting on the event speaks to the national priority of AI, smart cities, and keeping pace with the rest of the world in tech. Seen through the lens of global competition for investment dollars, engineers, and regulatory approval, the focus on positive reassurance makes sense.

Presenters from Europe, America, and Asia wore tailored suits and sometimes ties, while the Caucasian female host wore a pleated skirt and modest sweater, with short hair and a huge gaudy necklace. Everything was presented in English. But one can deduce that the audience, mostly wealthy Muslim royalty, came to the event wary of the dystopian possibilities. This might not have been as noticeable had the speakers themselves not seemed wearied by the skepticism, or by the need to promise that AI would be used for good, not evil. For example, Dr. Kevin Knight and Dr. Sebastian Thrun seemed equally unimpressed with their shared role in the conference, which was to explain “how AI will augment humanity rather than replace it.” Their tone shifted between condescending and dismissive, or stiff and rehearsed, but they got the point across. AI will be the tool of the powerful, so don’t worry, they said.

With Saudi money on the table and a predetermined narrative to parrot, foreign experts gladly pitched AI as the next frontier of everything. It was humorous to hear professors and engineers directly acknowledge the fears and stigmas of science fiction, which have been dismissively joked about in the West. Popular fears are becoming more and more real, but here, mocking the skeptics is not good enough.

AI as a child you have to train

- Artificial Intelligence was compared to a small child that learns by being fed information and grows up to be proficient at recognizing patterns and telling you what you want to know

- More data and processing power help these “children” learn faster and gain better insights for humans to use

- Inputting skewed data leads to blind spots and discrimination

Scientists emphasized a clear distinction between the “science fiction” notion of self-aware robots that could replace mankind (usually called Artificial General Intelligence) and the analogy of the “trained child” (called Machine Learning, or just regular AI). Experts laughed at the idea of Artificial General Intelligence as a fantasy that could not happen, but emphasized the utility of a trained assistant for almost any occupation. Such tools would never replace or conquer mankind, but enhance what people already do. Personally I believe this is true, and I’ve been saying it for ten years; there will not be an “awakening” but there will be more powerful tools in the hands of the same corrupt people. Of course, here we are meant to believe that the tools will be in the hands of the good guys, whoever they are.

According to the presenters, AI can be cross-trained to do similar tasks, such as a French translation AI learning Spanish more easily. But there were also funny examples of discrimination (which were spoken of in serious tones), such as an image search for “professional hairstyles” that apparently discriminated against certain ethnicities’ natural hair types, or a mask-detecting AI that was only effective at recognizing white men because the engineers who created the program trained it using only their own faces. The message to the Saudis was to include women and minorities they wouldn’t normally deign to involve, not because they value their contributions and need their insights, but because they might otherwise train their AI children to discriminate against their own kind and be blind to foreigners. This also explains why Google and other progressive companies have forcefully “retrained” their AI to push politically correct results into searches.
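The mask-detector story can be sketched with a toy example. This is only an illustration under loud assumptions: the single "feature" number per face, the two groups, and the halfway-threshold "detector" are all invented here and are not the system described at the summit. It shows how a model fitted to one group alone can work perfectly on that group and fail on another.

```python
# Toy illustration: every number below is made up for the example.

def fit_threshold(samples):
    """Learn a cutoff halfway between the average feature of each class."""
    masked = [x for x, label in samples if label == "mask"]
    bare = [x for x, label in samples if label == "no mask"]
    return (sum(masked) / len(masked) + sum(bare) / len(bare)) / 2

def predict(threshold, feature):
    return "mask" if feature > threshold else "no mask"

def accuracy(threshold, samples):
    hits = sum(predict(threshold, x) == label for x, label in samples)
    return hits / len(samples)

# Hypothetical group A: the only faces the engineers trained on.
group_a = [(0.80, "mask"), (0.90, "mask"), (0.85, "mask"),
           (0.20, "no mask"), (0.30, "no mask"), (0.25, "no mask")]
# Hypothetical group B: same task, but the feature values sit lower.
group_b = [(0.42, "mask"), (0.46, "mask"), (0.50, "mask"),
           (0.05, "no mask"), (0.10, "no mask"), (0.00, "no mask")]

skewed = fit_threshold(group_a)               # trained on group A only
balanced = fit_threshold(group_a + group_b)   # trained on both groups

print("skewed model on A:", accuracy(skewed, group_a))
print("skewed model on B:", accuracy(skewed, group_b))
print("balanced model on A:", accuracy(balanced, group_a))
print("balanced model on B:", accuracy(balanced, group_b))
```

In this toy setup the skewed model misses every masked face in group B, while the model trained on both groups handles both. Real systems are far more complicated, but the blind spot arises the same way: the model never saw the data it is later judged on.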

AI thought experiments

A speech from SenseTime CEO Xu Li argued that AI must be allowed to invent its own uses. He said researchers cannot impose their ideas onto the AI, but should let it take unexpected paths and perform its own “thought experiments.” He argued that scientists still cannot fully explain, using theoretical physics, how airplanes fly, but since flight works in testing and industrial application they have no choice but to accept it as a science and build an industry around it. Likewise, he said AI will perform routines we don’t understand, but that can be exploited and built upon. In essence, we will become less reliant on human engineers to propose crazy ideas.

Quantum Computing

“It really is like science fiction.” – Scott Crowder, Vice President of IBM Quantum

One of the most exciting presentations for me was about Quantum Computing. As I wrote in my book Fire In The Rabbit Hole, it’s one of those technologies that seems more like an elaborate fraud than a real frontier. Without exception, scientists who talk about it use nonsensical jargon and don’t even try to explain it in rational terms. My hypothesis has been that Quantum Computing is a ruse on par with CERN, pushing toward the post-logic New Age narrative. It prepares people for a new way of thinking about reality, which is that time, space, and causality have all been conquered by science, when in reality people are just being hoaxed into psyops, or worse yet, occult spiritual activity. Nevertheless, I hoped to be proven wrong by Scott Crowder, the VP of IBM’s quantum computing division. Sadly I learned nothing new, and Crowder used the same handful of buzzwords to dance around the actual workings of the technology—if it is a real technology at all. The only new thing I gleaned was that quantum computing will help users of cloud-based services match with the most appropriate traditional server solution for what they’re doing. That’s very boring. When Crowder said the words, “It really is like science fiction,” I lost hope. Whether billionaire Arabs buy into this kind of rhetoric or not remains to be seen, however.

Great future that we complain about

An amusing observation from Dr. Kevin Knight was that people will always complain, even about things that dramatically improve their lives. He used the example of air travel, which revolutionized the world. Today everyone complains about their flights, but air travel is undeniably one of the greatest accomplishments in man’s history. I found this to be a fair point.

There are many services we take for granted despite them being luxuries, and AI could theoretically be just like them. If nothing else, this helped me see the world from an insider’s point of view. When they see the fears of conspiracy theorists and skeptics, they compare them to those of Luddites or anti-technologists of the past. There can never be a utopia because humans will never be satisfied with what they get, but in their minds, the thankless job of engineers is to force change upon people in cooperation with investors who “get it.” Public support becomes less important as the great vision of the future becomes unstoppable. Summits like this are designed to reinvigorate everyone involved who may be sliding into doubts.

Catastrophic failure

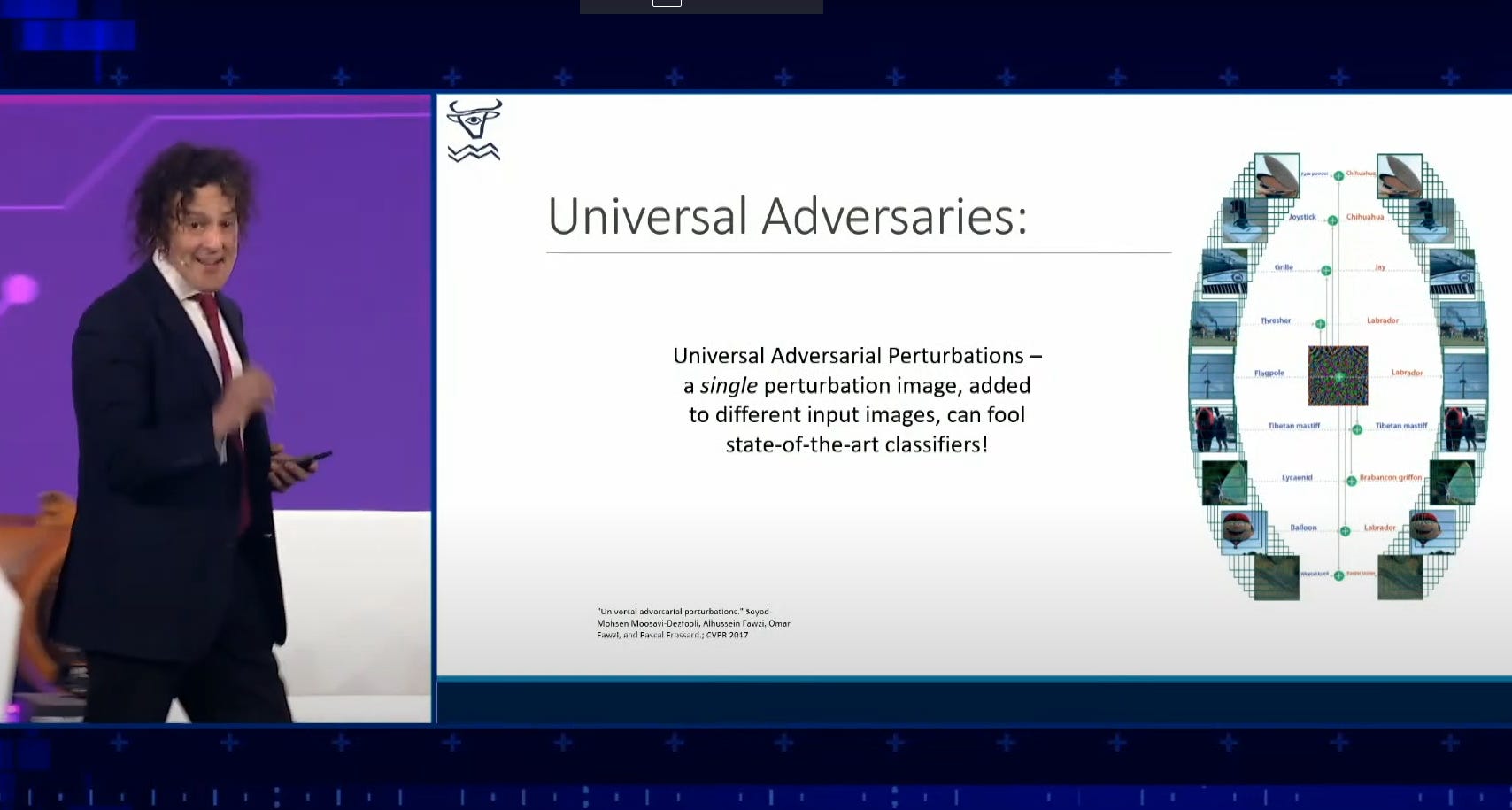

An incredible presentation by Philip Torr showed how easily AI can be tricked using subtle things like noise patterns on images, or small shapes placed strategically on the road for autonomous vehicles. To the human eye nothing has changed between one image and the other, but the algorithms can’t make any sense of the altered image because of some strange relationship in their recognition systems.

In other words, AI is “fragile” and can be broken easily by those who know how to interfere with it. In the image above, the AI recognizes a flagpole normally, but with an almost-invisible noise pattern overlaid it thinks the flagpole is a Labrador. The same goes for a hot air balloon and a threshing machine. But the same “perturbation image” convinced the AI that a joystick was a Chihuahua. Likewise, certain images with no perturbation at all are totally misunderstood by the AI simply because they have been rotated to a specific angle. A revolver is recognized normally, but with a slight rotation is seen as a mousetrap. Color schemes can have the same effect. The correlations are inexplicable and hard to untangle, yet to a human the recognition is common sense.
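A rough sense of why tiny noise can flip a label comes from a toy model. The sketch below is not Torr's demonstration and not a real vision system: the linear "classifier," its alternating weights, the two labels, and the image values are all assumptions made up for illustration. It uses the idea behind what researchers call the fast gradient sign method, nudging every pixel a tiny amount in whichever direction hurts the score most.

```python
# Toy illustration only: a linear "classifier" with made-up weights,
# not a real vision model and not the system shown at the summit.

def score(weights, pixels):
    """Weighted sum of pixel values; a positive score means 'flagpole'."""
    return sum(w * p for w, p in zip(weights, pixels))

def classify(weights, pixels):
    return "flagpole" if score(weights, pixels) > 0 else "labrador"

def sign(value):
    """Return 1, 0, or -1 depending on the sign of value."""
    return (value > 0) - (value < 0)

def perturb(weights, pixels, epsilon):
    """Move each pixel by at most epsilon in the direction that lowers the score."""
    return [p - epsilon * sign(w) for w, p in zip(weights, pixels)]

NUM_PIXELS = 10_000
# Hypothetical weights: alternating +1 and -1.
weights = [1.0 if i % 2 == 0 else -1.0 for i in range(NUM_PIXELS)]
# A mid-gray image tilted very slightly toward the 'flagpole' side.
image = [0.5 + 0.01 * sign(w) for w in weights]
# The same image with every pixel shifted by 0.02 on a 0-to-1 scale.
adversarial = perturb(weights, image, epsilon=0.02)

largest_change = max(abs(a - b) for a, b in zip(image, adversarial))
print("original:", classify(weights, image))
print("perturbed:", classify(weights, adversarial))
print(f"largest change to any pixel: {largest_change:.3f}")
```

No single pixel moves by more than two percent of its range, which a person would not notice, yet the small shifts add up across ten thousand pixels and push the total score across the decision line.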

Imagine if a “smart city” were designed around sensors, cameras, and data-tracking systems that could be baffled and tricked so easily. What’s the difference between a man holding a pistol and a man holding a mousetrap? What if being black-skinned meant the AI didn’t recognize you? You might be locked out of buildings, but also not detected by security cameras; you’d be like the Invisible Man.

This is a huge concern, and I was quite impressed that they brought it up. Knowing that engineers are aware of these problems and working on solutions tells me that we’re still a very long way from anything resembling Minority Report. Certainly, if Smart Cities are intended for rich and powerful tourists, these kinds of concerns would quickly scare them away.

Where did it go?

Strangely, the official channel of the Global AI Summit has been modified since I started writing this piece. The video I was watching as a reference, called the “Plenary – Day 1 English” (the main stage presentation, totaling about 10 hours), has been DELETED. Here is the link to the same video except not in English. I saved the video to my Favorites list, and it clearly shows a broken thumbnail and says the video was deleted by the user. Why?

Did somebody with power, such as Crown Prince Mohammed bin Salman himself, find something in the presentation objectionable? Was there some risk of unflattering information being spread around? Perhaps the commentary from an expert like Philip Torr in his presentation on the vulnerabilities of AI? We don’t know, but it seems that only the Day 1 English Plenary video has been taken down, which is odd.

Here are the English plenary videos for Day 2 and Day 3:

Assurances and definitions

The most important part of the Day 1 Plenary was the “AI Never” portion, which unfortunately I did not get to see because it was the last part of the video. Nevertheless, here is what the schedule promised:

Ethics was central to Day 1’s event, and it was interesting to see them tackle questions of bias, privacy concerns, unaccountability, and greed. Of course, it all ended up with assurances that lawyers and politicians would find a solution in partnership with “stakeholders” such as local communities, but at least they didn’t hide from it.

One man’s progress is another man’s injustice

We all know that technocrats and globalists are working to enslave us, but they’re doing it in the name of progress, equity, and responsible stewardship of the planet. While listening to the AI event, one cannot help but notice that even their most optimistic lies tell a story of takeover, conquest, and centralization. They don’t even pretend to care about the classic principles of liberty, prosperity, and increased standards of living. Futurists never care about the human cost of their visions. But the dictatorships seeking to utilize AI today cannot escape public outrage and even high-level opposition from investors who still have souls.

Source – https://winterchristian.substack.com/p/fear-not-humans-global-ai-summit